“I DON’T BELIEVE AI WILL REPLACE CHARTERED ACCOUNTANTS, BUT I DO FIRMLY BELIEVE THAT THOSE WHO UNDERSTAND AND LEVERAGE AI WILL REPLACE THOSE WHO DON’T.”

Some perceive AI as a big threat to the profession, while others perceive it as a big opportunity. Is it like seeing a glass half full or half empty, or does it have some deep nuances? What is in store for the CA Profession with the advent of AI? Can we ignore it, or do we have to embrace it? CA Ninad Karpe answers these and several other questions in an interview with BCAS.

Ninad Karpe is the Founder of Karpe Diem Ventures, which invests in early-stage startups in India. He is also the Founder & Partner at 100X.VC, India’s pioneering early-stage VC firm that has invested in 180 startups through the innovative iSAFE note model. Widely known as a “startup whisperer” for his sharp insights and no-nonsense advice, Karpe earlier served as MD & CEO of Aptech Ltd. and as MD of CA Technologies India. Karpe has authored the business strategy book “BOND to BABA” and served as Chairman of CII Western Region (2017-18). Passionate about storytelling and creativity, he has also produced four Marathi plays, seamlessly blending boardroom strategy with the magic of the stage.

He is a leading technology voice within the CA fraternity, so his insights on how the AI revolution is affecting the CA profession carry weight. Given his time constraints, BCAJ sent him questions and received written answers. We hope this interview will enrich readers.

Q. Mr. Karpe, thank you for sparing your valuable time. Let’s begin by discussing the future. How do you see the role of a chartered accountant evolving over the next five years, especially given the rise of AI?

A. Ninad Karpe: Thank you, it’s a pleasure to discuss AI. We are currently witnessing a profound shift in the accounting profession. I don’t believe AI will replace chartered accountants, but I do firmly believe that those who understand and leverage AI will replace those who don’t.

Five years from now, the CA’s role will have moved away from being execution-heavy and compliance-focused toward something far more strategic and analytical. Much of the routine work, such as data entry, reconciliation, and standard reporting, will be completely automated. But that only opens up space for CAs to deliver real value through insights, interpretation, and decision support. Human judgment won’t become irrelevant. In fact, it will become more important, because it will be applied to higher-order problems. The AI-assisted CA will be the norm, not the exception.

Q. That’s a powerful vision. In your view, what’s the most underrated opportunity that AI presents to accounting professionals right now?

A. Ninad Karpe: That would be the ability of AI to make sense of unstructured data.

CAs are used to working with structured ledgers and financial statements. But what about the mountains of unstructured data, like emails, WhatsApp chats, handwritten notes, scanned invoices, or boardroom transcripts? AI can now process, analyse, and even summarise such data. That’s a goldmine.

Most firms are just scratching the surface by using AI for automating data entry or filling out forms. But the real breakthrough lies in using AI for strategic insights like flagging hidden risks, spotting patterns, and even predicting client behaviour. This capacity to derive intelligence from chaos is what can transform how CAs add value.

Q. Which AI tools do you find most effective for day-to-day accounting tasks? And how safe is it to use free versions of these tools?

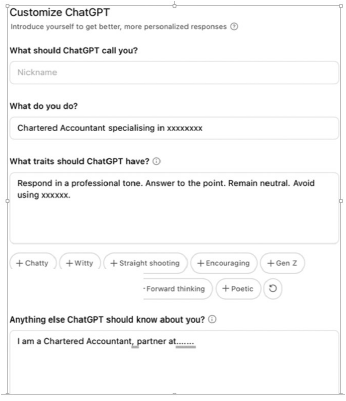

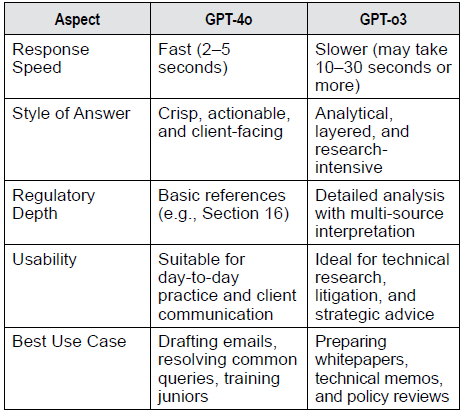

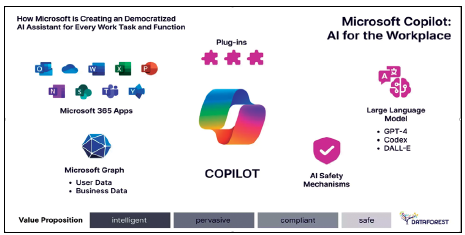

A. Ninad Karpe: For everyday use, tools like ChatGPT, Microsoft 365 Copilot, and AI-enhanced Google Sheets are quite useful. You can use them for summarising tax policies, preparing checklists, analysing trends, or even drafting emails and reports.

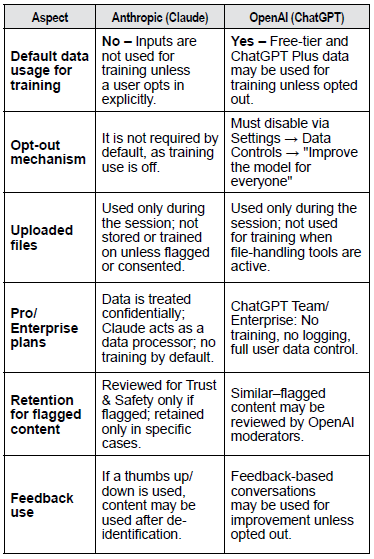

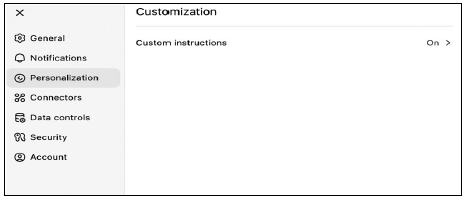

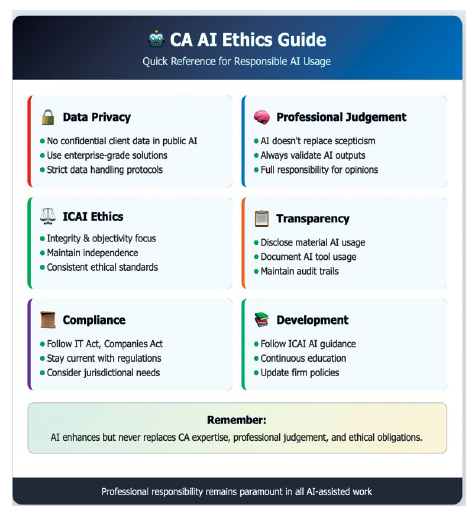

That said, I must stress that data sensitivity is paramount. For anything involving client data, free versions should be avoided. Use enterprise-grade tools that offer robust security, encryption, and compliance controls. Experimentation is great, and free tools are ideal for learning and prototyping. But when it comes to real-world applications, especially involving confidential financial information, always prioritise data privacy.

Q. How should mid-sized firms approach AI adoption? Should they prioritise investing in technology or focus on building talent?

A. Ninad Karpe: Definitely start with talent.

Technology can be bought, but talent needs to be nurtured. I always recommend identifying an “AI Champion” within the firm: someone who is naturally curious, digitally savvy, and willing to experiment. They don’t need to be a coder or a data scientist. But they do need to be open-minded and passionate about exploring new tools.

Start with one small use case, like automating invoice classification or generating audit checklists. Allocate a modest annual budget, say ₹5–7 lakh. That’s more than enough for a pilot program that could yield 10x returns in productivity and insights. The key is to build a culture of experimentation. Begin small, learn fast, and scale confidently.

Q. Can AI ever replace human judgment in complex areas like auditing or tax planning?

A. Ninad Karpe: AI can assist, but not replace, human judgment.

It can definitely highlight inconsistencies, flag outliers, and run complex simulations. But when it comes to interpretation, especially in areas like tax law or regulatory compliance, human experience is irreplaceable. A CA understands nuance, ethics, and business context, all of which are beyond the capabilities of even the most sophisticated AI models today.

AI might be able to tell you what can be done. But only a human can determine what should be done. The “why” behind a financial recommendation, or the strategic judgment behind audit materiality, still lies in the human domain.

Q. That brings us to a critical concern. What are the biggest risks of placing blind trust in AI?

A. Ninad Karpe: One word. Hallucinations.

AI tools sometimes generate answers that are completely wrong, but sound perfectly plausible. That’s incredibly dangerous in our field, where accuracy is non-negotiable. If those hallucinated results make their way into a tax filing or an audit report, it’s not the AI that is held responsible; it’s the CA who signed off.

Another risk is outdated or irrelevant data. Many AI models are trained on publicly available data, which may not be current or jurisdiction-specific. So yes, AI is a wonderful assistant. But it needs constant supervision, especially in high-stakes accounting environments.

Q. How should firms maintain client trust while increasingly using AI in their advisory processes?

A. Ninad Karpe: Be transparent. Always.

Tell your clients how you’re using AI. Let them know it’s being used to support, not to replace, your professional judgment. For example, explain that the AI tool is helping cross-verify financial entries, scan for anomalies, or summarise reports, but the final call is always yours.

Clients appreciate honesty. When they see that AI enables better, faster, and more accurate service from you, they consider it a value addition. But if they suspect that you’re hiding behind the technology, that’s when trust breaks down. Transparency is not just ethical, it is strategic.

Q. Could you share a real-world example where AI truly made a difference?

A. Ninad Karpe: Absolutely. There’s a retail business I know of that was using an AI-based GST reconciliation tool. This tool flagged a recurring mismatch in filing entries, a pattern that manual checks had missed for months.

Because of that early detection, the company avoided a ₹15 lakh penalty. That one instance alone justified their investment in the tool several times over. It wasn’t just about speed, it was about precision, and about averting a regulatory crisis. That’s the real power of AI, when it turns data into actionable insight.
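To make the reconciliation idea concrete, here is a minimal sketch offered as an editorial illustration. It is not the tool the retailer used and does not call any real GST portal API. The field names (`invoice_no`, `supplier`, `tax`), the sample data, and the ₹1 tolerance are all assumptions. The sketch compares a firm's purchase register against supplier-filed data and then looks for suppliers whose mismatches recur, which is the kind of pattern a manual check can miss.

```python
from collections import Counter
from decimal import Decimal


def find_mismatches(books, filed, tolerance=Decimal("1.00")):
    """Return (invoice_no, supplier, reason) for book entries that do not reconcile."""
    filed_by_invoice = {row["invoice_no"]: row for row in filed}
    mismatches = []
    for row in books:
        match = filed_by_invoice.get(row["invoice_no"])
        if match is None:
            mismatches.append((row["invoice_no"], row["supplier"], "missing in filed data"))
        elif abs(row["tax"] - match["tax"]) > tolerance:
            mismatches.append((row["invoice_no"], row["supplier"], "tax amount differs"))
    return mismatches


def recurring_suppliers(mismatches, min_count=2):
    """Suppliers that appear in at least min_count mismatches."""
    counts = Counter(supplier for _, supplier, _ in mismatches)
    return {supplier: n for supplier, n in counts.items() if n >= min_count}


# Hypothetical sample data: the firm's purchase register ...
books = [
    {"invoice_no": "INV-001", "supplier": "Alpha Traders", "tax": Decimal("1800.00")},
    {"invoice_no": "INV-002", "supplier": "Beta Supplies", "tax": Decimal("900.00")},
    {"invoice_no": "INV-003", "supplier": "Alpha Traders", "tax": Decimal("2700.00")},
    {"invoice_no": "INV-004", "supplier": "Alpha Traders", "tax": Decimal("450.00")},
]
# ... and what the suppliers actually filed.
filed = [
    {"invoice_no": "INV-001", "supplier": "Alpha Traders", "tax": Decimal("1620.00")},
    {"invoice_no": "INV-002", "supplier": "Beta Supplies", "tax": Decimal("900.00")},
    {"invoice_no": "INV-004", "supplier": "Alpha Traders", "tax": Decimal("450.00")},
]

mismatches = find_mismatches(books, filed)
repeat_offenders = recurring_suppliers(mismatches)
print(mismatches)
print(repeat_offenders)
```

A production tool would do far more, such as fuzzy matching of invoice numbers and period-wise tracking. The point here is only that a recurring pattern becomes visible once mismatches are grouped rather than reviewed one at a time.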

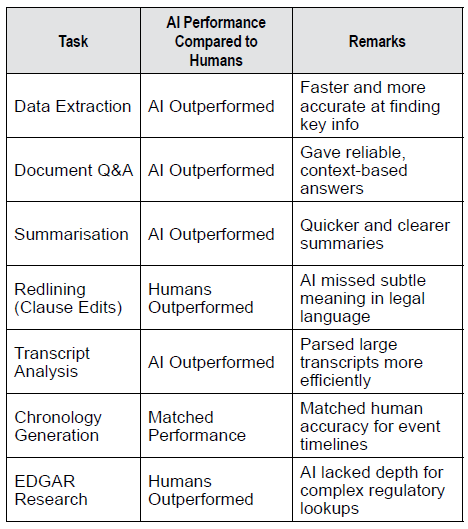

Q. Before implementing an AI tool, how should a firm assess whether the tool is reliable?

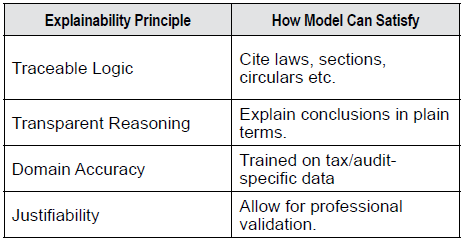

A. Ninad Karpe: Start with internal testing. Feed the AI dummy data and evaluate its outputs. Ask yourself: Do the results make sense? Are they consistent with domain knowledge? More importantly, can the AI explain how it arrived at those conclusions?

Any model that functions like a black box, where you can’t understand or trace the logic, is a red flag. In accounting and auditing, transparency is everything. Reliable AI doesn’t just give you answers, it gives you justifications. That’s what you want to look for.

Q. Is AI adoption creating a divide in the profession between tech-savvy CAs and traditional practitioners?

A. Ninad Karpe: Yes. And that divide is growing. But let me clarify, it’s not an age issue. It’s an attitude issue.

I’ve seen 50-year-old senior partners embrace AI with more enthusiasm than 25-year-old associates. The real difference is mindset. Those who see AI as a threat will struggle. Those who see it as a tool will thrive.

Being tech-fluent is no longer optional. Just like knowing Tally was essential 20 years ago, understanding AI tools is now part of the core skill set. If you’re not learning, you’re lagging.

Q. From a policy standpoint, what framework do you believe India should adopt to ensure ethical AI in finance?

A. Ninad Karpe: We need a national “Finance-AI Code of Conduct.” And this should be co-created by ICAI, regulatory authorities, industry leaders, and clients.

This framework should rest on four key pillars:

- Data Protection: Client information must be encrypted and access-controlled.

- Transparent Algorithms: Firms should understand and disclose the logic behind AI decisions.

- Usage Disclosure: Clients should be aware of how AI tools are used in service delivery.

- Audit Trails: Every AI-assisted output must be traceable and verifiable.

As AI advances, so must our ethical standards. We can’t afford to be reactive – we must be proactive in shaping responsible adoption.
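As an editorial illustration of the "Audit Trails" pillar, the sketch below shows one possible way to make AI-assisted outputs traceable. It is not a prescribed or ICAI-endorsed design. The function names `append_entry` and `verify`, the record fields, and the sample values are hypothetical. Each log entry stores hashes of the prompt and output rather than the text itself (an assumption made with the "Data Protection" pillar in mind) and is chained to the previous entry, so a later alteration can be detected.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def _sha256(text):
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def append_entry(trail, tool, prompt, output, reviewer):
    """Append a hash-chained record of one AI-assisted output to the trail."""
    entry = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "tool": tool,
        "prompt_sha256": _sha256(prompt),
        "output_sha256": _sha256(output),
        "reviewer": reviewer,  # the professional who signed off
        "prev_hash": trail[-1]["entry_hash"] if trail else GENESIS_HASH,
    }
    entry["entry_hash"] = _sha256(json.dumps(entry, sort_keys=True))
    trail.append(entry)
    return entry


def verify(trail):
    """Return True if no entry has been altered, removed, or reordered."""
    prev_hash = GENESIS_HASH
    for entry in trail:
        body = {k: v for k, v in entry.items() if k != "entry_hash"}
        expected = _sha256(json.dumps(body, sort_keys=True))
        if entry["prev_hash"] != prev_hash or entry["entry_hash"] != expected:
            return False
        prev_hash = entry["entry_hash"]
    return True


# Hypothetical usage
trail = []
append_entry(trail, "example-ai-tool", "Summarise ledger anomalies", "3 anomalies found", "CA Reviewer A")
append_entry(trail, "example-ai-tool", "Draft audit checklist", "Checklist v1", "CA Reviewer B")
print(verify(trail))
```

Changing any field of an earlier entry after the fact causes `verify` to return `False`, which is the property that makes the log useful as evidence of who reviewed what.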

Q. Finally, if you were a young CA starting your career today, how would you prepare for this AI-powered future?

A. Ninad Karpe: I would double down on two things: strong financial acumen and digital fluency.

Master the fundamentals of accounting standards, tax laws, and regulatory frameworks. That’s your core. But alongside that, become proficient with AI tools. Learn to prompt effectively, analyse outputs critically, and integrate these tools into your daily workflow.

Think of yourself as an “augmented accountant”: a blend of strategist, analyst, and tech interpreter. That’s not a futuristic fantasy. That’s the reality already unfolding around us. And those who are ready will lead the profession into its most exciting era.

Q. Any final concluding thoughts?

A. Ninad Karpe: As Chartered Accountants, embracing AI isn’t optional — it’s essential. But what sets us apart isn’t the ability to crunch numbers faster — it’s our judgment, ethics, and human context. AI may offer intelligence, but we offer wisdom.

So, the next time your audit file closes at the speed of light, just remember — behind every great AI is a greater CA… quietly debugging the logic, one ledger at a time.

Q. Mr. Karpe, thank you for this insightful and inspiring knowledge sharing. Your perspectives provide a roadmap for firms and professionals navigating the AI transition.

A. Ninad Karpe: Thank you. It’s been a pleasure to connect with BCAS readers and share these thoughts. The future is not just coming. It is already here. Let’s embrace it.